2025-03-25

Sam Reed

Look Alive! (Part 1)

A theory on the cause of the AI Industry's sharp turn to focus on agents.

Next Steps

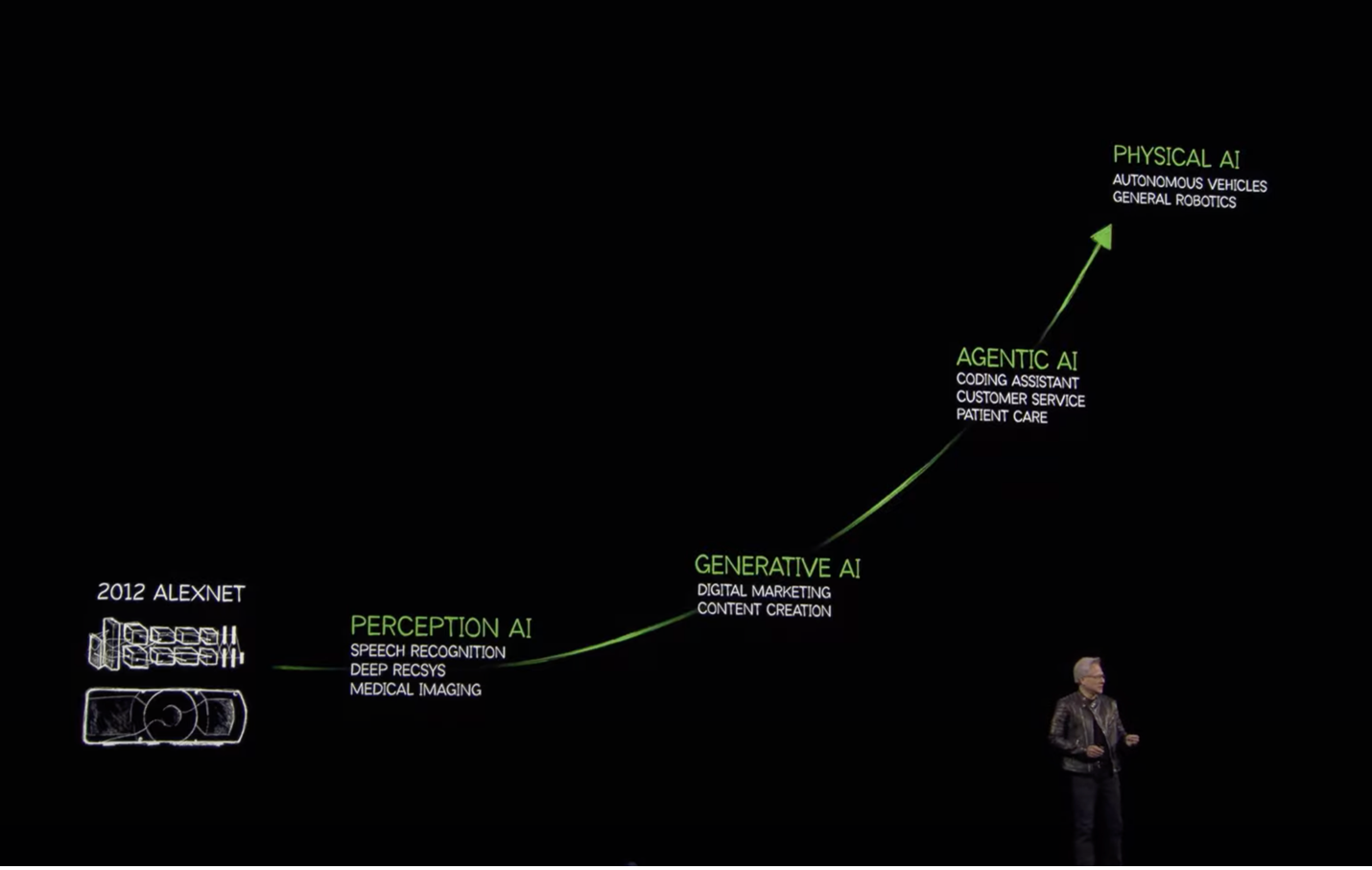

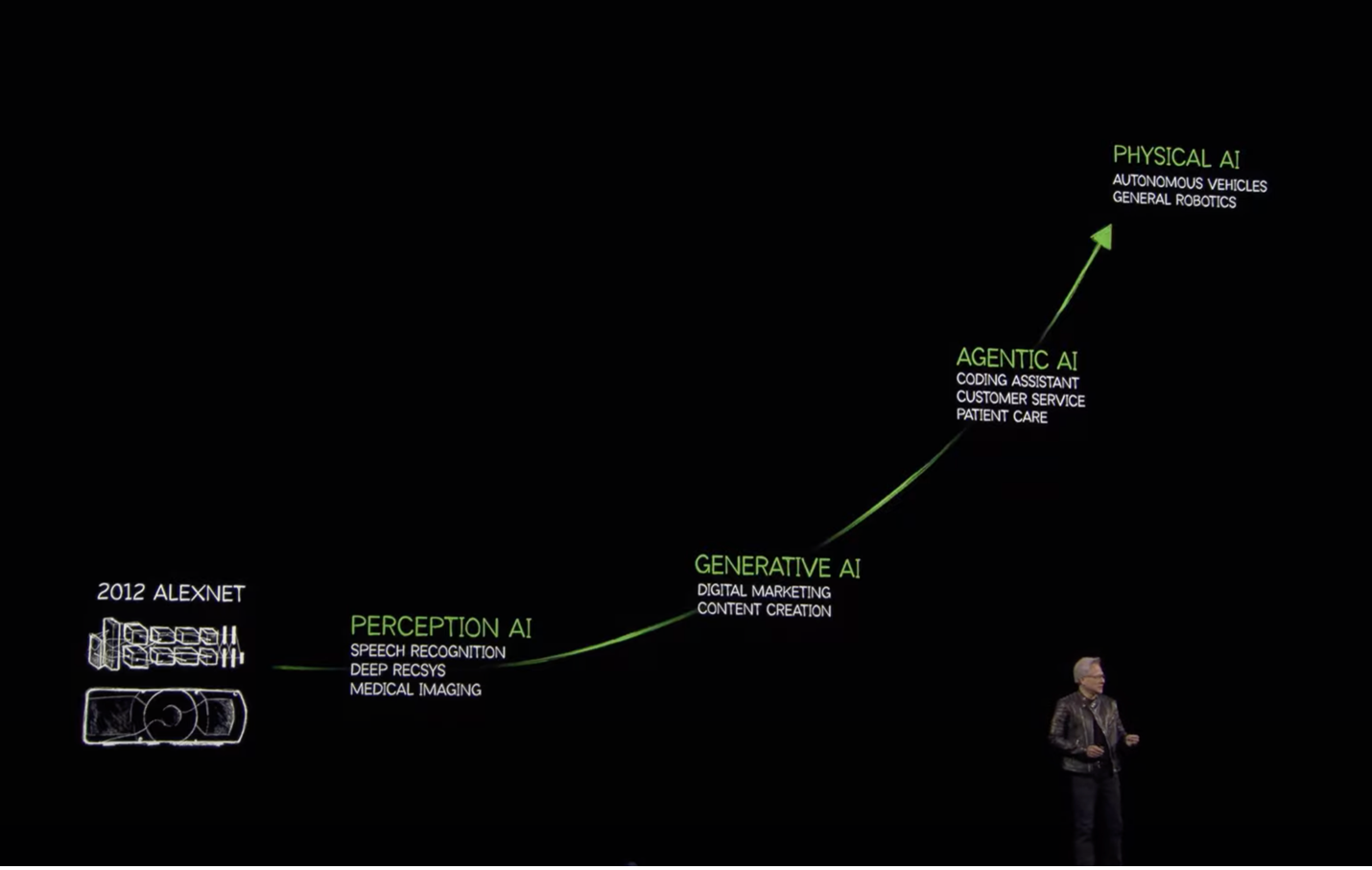

Last week, I tuned in for a bit of NVIDIA’s GTC March 2025 Keynote, presented by NVIDIA CEO Jensen Huang.

At the 8:05 mark, Huang made a statement that caught my attention. While discussing how AI is transforming computing, he said:

“[In] the last several years…[a] major breakthrough happened…we call it ‘Agentic AI.’ Agentic AI basically means that you have an AI that has agency.”

I’ve been wondering for a while whether terms like “AI Agents” and “Agentic AI” are meant to evoke the idea of human agency. So hearing Huang state explicitly that, at least from his perspective, “Agentic AI” does pay homage to the human concept (to be fair, he didn’t include the “human” qualifier) was something of a confirmation for me.

The Governor(s)

At the risk of stating the obvious, there is no governing body behind the rapidly hardening AI (LLM) industry lexicon. Contrast this with an industry like accounting, which has governing bodies such as the Financial Accounting Standards Board. As a result, “official” definitions for common industry terms like “AI Agents” don’t really exist. There is, however, very obviously an unofficial governing body of AI jargon, made up of leading AI companies, high-profile subject matter experts, and social media influencers (the lines between these groups often blur). These are the entities that others (like me!) pay attention to, and they are the ones leading the current conversation around AI.

The term “vibe coding,” which we discussed a bit last week, is a great example of a prominent influencer’s ability to shape the LLM lexicon with a single post on X. The term comes from a post by Andrej Karpathy, an OpenAI co-founder, former head of AI at Tesla, and household name in present-day AI thought leadership. In the few weeks since that post, the term has exploded across social media platforms, to the point where content with “vibe coding” in the title is harder to avoid than to find. (I should acknowledge that my social media algorithms are tuned to serve me lots of AI content, so your experience of the world these days is probably different from mine.)

I bring this up not to write another piece on vibe coding, but as a reminder of how much power the entities that have (so far) established credibility in the LLM space have to create and spread terminology. This isn’t inherently bad, but if we don’t take it seriously, it can create the conditions for a self-reinforcing cycle of misinterpretation. So the fact that Anthropic, OpenAI, NVIDIA (mentioned above), Y Combinator, and many other notable voices in the online tech and AI world are aligning around the term “AI Agent,” and that a prominent member of this group (Jensen Huang) has recently given us a concise definition of it, makes me feel that a deeper look at the term is warranted.

A Penny For Your Thoughts

Dr. Iain McGilchrist (who sadly is not featured enough in online tech circles) might be able to help us out with our analysis.

Discussing the importance of metaphor in his book The Master and His Emissary (a treasure), McGilchrist writes:

“Language functions like money. It is only an intermediary. But like money it takes on some of the life of the things it represents. It begins in the world of experience and returns to the world of experience – and it does so via metaphor, which is a function of the [brain’s] right hemisphere and is rooted in the body. To use a metaphor, language is the money of thought.”

Language is the money of thought. It enters and exits the world of experience through metaphor. What does this mean?

As I read it, Iain is saying that language, like money, is a mechanism that lets us think and communicate in a way that is decoupled, in time and space, from the present moment (just as money lets us store value in a way that is decoupled from physical assets). In fact, many of us get so caught up in this decoupled world of language that practices like mindfulness meditation, which is designed to quiet our inner monologue and bring us back to the present moment, seem to be increasingly common in popular culture.

Iain goes on:

“…to lead us out of the web of language, to the lived world, ultimately to something that can be pointed to…Everything has to be expressed in terms of something else, and those something elses eventually have to come back to the [human] body. To change the metaphor…that is where someone’s spade reaches bedrock and is turned.”

I think this passage makes the point even clearer: Dr. McGilchrist is arguing that human language is a combinatorially explosive system of metaphors that, for each of us, begins with the body. This sounds a bit strange at first, but it makes sense. Every person experiences the world from within a body, so the body seems as stable a bedrock on which to build our understanding of the world as anything else (I should note that I’ve been grappling with these ideas for a while and still find them challenging!).

If we accept Dr. McGilchrist’s idea about the fundamental importance of metaphor in language (and learning), then we should also feel comfortable claiming that successfully communicating (and teaching) a new idea is a matter of finding the right metaphor for one’s audience, whether that metaphor is a concise anthropomorphism, like…I don’t know…the term “Agentic AI,” or a more abstract web of concepts organized into a baggy, 1,100-word essay, like…well, anyway.

This is why I think the framing of “AI Agents” as the desired outcome of LLMs deserves a closer look. If metaphor is essential to our understanding of the world, and a group of industry-leading entities all start communicating with the same metaphor, and that metaphor sits so close to the body that it nearly touches the bedrock of our knowledge, and it is being used to describe a nascent, breakthrough technology, we might just have some strange times ahead.

TO BE CONTINUED NEXT WEEK (I have more to say in Part 2, but I’m trying to keep these posts to ~1,000 words).

See you then!